Metrics That We Measure - Measuring Efficacy of Your Machine Learning Models

Posted October 30, 2021 by Gowri Shankar ‐ 7 min read

Have we identified the perfect metrics to measure the efficacy of our machine learning models? A perfect metric - does that even exist? A recent LinkedIn post on measuring metrics caught my attention. It makes a somewhat opinionated claim, backed by substantial evidence and arguments from the author. The post drew attention from many and became a valuable repository of information and views from diverse people. This post summarizes the responses from its participants.

Nikhil Aggarwal from Google made the post that drew my attention and it can be accessed here

The F1 score is one of the most widely used but misunderstood metrics.

In real-life scenarios, I hardly find a use case where the cost of a

false positive is equal to a false negative. We can’t give equal weightage

to recall and precision. Fbeta is more appropriate where the value of beta

changes based on business needs and problem statements.

- Nikhil Aggarwal, Google

I thank Nikhil and the LinkedIn community who participated in the discussion for inspiring me to write this post.

Objective

The key objective of this post is to catalog critical comments made by the participants of Nikhil Aggarwal’s post on $F_1$ vs $F_{\beta}$ ($F_2$) and the cost of False Positives vs False Negatives.

Introduction

Is accuracy the right metric to evaluate the efficacy of our machine learning models? If not, then why do the most qualified and accomplished professionals start the conversation by asking, How accurate is your model? In truth, the term accurate here has nothing to do with the accuracy metric. I believe they mean to ask, Have you identified the right metrics to evaluate, and what is the efficacy of your model? Disclaimer: They may or may not know the right metrics and their significance to the context of the problem. Meanwhile, the question How accurate is your model? is destined to remain in its glory for years to come. It is a question significant enough to stand in for every evaluation metric we have invented so far and will invent in the future.

Academia or Industry

Context is the King and Medium is the Message - In data science, there is no golden rule or clearly defined goal to achieve. Hence the context plays a crucial role in glorifying or cremating an idea. Dr. Andrew Ng, in a recent email to his followers, detailed the differences between academia and industry for data science professionals who are transitioning. Following are the measures that one needs to keep in mind based on the nature of one's organization, i.e., academia or industry:

- Speed vs Accuracy

- Return on Investment vs Novelty

- Experienced vs Junior Teams

- Interdisciplinary Work vs Disciplinary Specialization

- Top-down vs Bottom-up Management

If one looks closely at the above list, they are all either quantitative or qualitative measures of a professional's success in the context of the organization he/she represents, i.e., there is no hard and fast rule when it comes to data science because there is more than one right answer for most problems.

Metrics Mislead

Machine learning is not a deterministic process. Its outcomes are stochastic because we lack information about all the confounders. Hence the metrics that work today may or may not be valid tomorrow, for a simple reason called data drift. After all, we rely upon and measure the density of a predictor to make predictions, and that density is dynamic as time moves.

the statistical component of your exercise ends when you output a probability

for each class of your new sample. Mapping these predicted probabilities

$(\hat{p}, 1-\hat{p})$ to a 0-1 classification, by choosing a threshold beyond which you

classify a new observation as 1 vs. 0 is not part of the statistics any more.

It is part of the decision component

- Venkat Raman, Aryma Lab
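The separation described in the quote above can be sketched in a few lines. This is only an illustration, assuming a model that already outputs a probability for class 1; the function name `classify` and the probabilities in `p_hat` are hypothetical. The same probabilities produce different hard labels depending on the threshold, and picking that threshold is the decision step.

```python
def classify(probabilities, threshold):
    """Map predicted probabilities of class 1 to hard 0/1 labels."""
    return [1 if p >= threshold else 0 for p in probabilities]


# Hypothetical predicted probabilities from some already-trained model.
p_hat = [0.05, 0.30, 0.45, 0.55, 0.80, 0.95]

# The statistical output (p_hat) is identical in both calls;
# only the decision rule (the threshold) changes.
print(classify(p_hat, 0.5))  # → [0, 0, 0, 1, 1, 1]
print(classify(p_hat, 0.3))  # → [0, 1, 1, 1, 1, 1]
```

Lowering the threshold trades false negatives for false positives, which is why the choice depends on the cost of each error rather than on the model alone.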

Assumptions made because of a business context that influences the outcome are biases. The context often comes not from the data but from some sort of inductive bias introduced into the system. This bias makes one judgemental about an idea and ends in sub-optimal results. Hence an evaluation metric is a mere indicator of where the data leans rather than an autocratic authority destined to make one’s decisions.

Classic Case of Credit Risk

Is it possible for a machine learning model to predict the credit risk of a potential borrower accurately? Taking defaulter as the positive class, there are two error cases to think through:

- Loan approval for a potential defaulter (False Negative)

- Loan rejection for a potential customer (False Positive)

We generally use custom metrics built on top of recall, because

if we weed out all risky customers we're losing money in terms

of interest and late.

- Kriti Doneria, Kaggle Master

From a business point of view, the cost of the uncertainty about whether a customer is a defaulter is less than the revenue from interest and late payments from risky customers, claims Manjunatha. It is quite evident from this section of the post that machine learning algorithms and their evaluation metrics are at a nascent stage where they can’t make decisions independently.
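One way to make this trade-off concrete is to attach a cost to each kind of error and compare decision thresholds by total cost. The sketch below is not the method used by any of the commenters. The labels, the predicted default probabilities, and the cost figures (5 units for a loan approved to a defaulter, 1 unit for a rejected good customer) are all hypothetical.

```python
def total_cost(y_true, p_default, threshold, cost_fn, cost_fp):
    """Sum the business cost of errors; class 1 means 'defaulter'."""
    cost = 0.0
    for actual, p in zip(y_true, p_default):
        predicted = 1 if p >= threshold else 0
        if actual == 1 and predicted == 0:
            cost += cost_fn  # false negative: loan approved for a defaulter
        elif actual == 0 and predicted == 1:
            cost += cost_fp  # false positive: loan rejected for a good customer
    return cost


# Hypothetical ground truth and predicted probabilities of default.
y_true = [0, 0, 0, 0, 1, 0, 1, 1]
p_default = [0.1, 0.2, 0.3, 0.4, 0.45, 0.6, 0.7, 0.9]

# Hypothetical costs: a default hurts five times more than a lost customer.
thresholds = [0.25, 0.5, 0.75]
costs = {t: total_cost(y_true, p_default, t, cost_fn=5.0, cost_fp=1.0)
         for t in thresholds}
best = min(costs, key=costs.get)

print(costs)  # → {0.25: 3.0, 0.5: 6.0, 0.75: 10.0}
print(best)   # → 0.25
```

With different cost figures the preferred threshold moves, which is the point made in the discussion: the business context, not the metric, decides.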

The Metrics

In this section, we shall revisit the common metrics that we often use to evaluate our machine learning models.

Accuracy

To scribe in words, “How often is the classification model correct overall?”

$$\frac{True \ Positives + True \ Negatives}{Total \ No. \ of \ Samples}$$
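A small sketch shows why accuracy alone can mislead on imbalanced data. The dataset is hypothetical: 5 positives among 100 samples, scored against a trivial model that always predicts the negative class.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the actual label."""
    correct = sum(1 for actual, predicted in zip(y_true, y_pred)
                  if actual == predicted)
    return correct / len(y_true)


# Hypothetical imbalanced dataset: 5 positives, 95 negatives.
y_true = [1] * 5 + [0] * 95

# A "model" that never predicts the positive class.
always_negative = [0] * 100

print(accuracy(y_true, always_negative))  # → 0.95
```

The score is 95% even though not a single positive sample was found.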

Confusion Matrix

A confusion matrix is used to describe the performance of a binary classification model. There are four basic terms to be pondered:

- True Positives: Predicted as TRUE and they are TRUE

- True Negatives: Predicted as FALSE and they are FALSE

- False Positives or Type 1 Error: Predicted as TRUE but they are FALSE

- False Negatives or Type 2 Error: Predicted as FALSE but they are TRUE

| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | True Positives | False Positives |
| Predicted Negative | False Negatives | True Negatives |
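The four cells of the table can be counted directly from paired labels. This is a minimal sketch assuming labels are encoded as 1 (TRUE) and 0 (FALSE); the function name `confusion_counts` and the sample labels are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels encoded as 1 and 0."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            counts["TP"] += 1
        elif predicted == 1 and actual == 0:
            counts["FP"] += 1  # Type 1 error
        elif predicted == 0 and actual == 1:
            counts["FN"] += 1  # Type 2 error
        else:
            counts["TN"] += 1
    return counts


# Hypothetical actual and predicted labels.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

print(confusion_counts(y_true, y_pred))
# → {'TP': 3, 'FP': 1, 'FN': 1, 'TN': 3}
```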

Precision

To scribe in words, “When it predicts TRUE, how often is the model correct?”

$$\frac{True \ Positives}{True \ Positives + False \ Positives}$$

Recall (or Sensitivity/TPR)

To scribe in words, TPR is “If it is TRUE, how often does the model predict TRUE?”

$$\frac{True \ Positives}{True \ Positives + False \ Negatives}$$
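Precision and recall follow directly from the confusion-matrix counts. The sketch below uses hypothetical counts (8 true positives, 2 false positives, 8 false negatives) and returns 0.0 when a denominator is zero, which is a convention chosen here rather than a universal rule.

```python
def precision(tp, fp):
    """Of everything predicted TRUE, the fraction that is actually TRUE."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Of everything actually TRUE, the fraction that was predicted TRUE."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts: the model is usually right when it says TRUE,
# but it misses half of the actual positives.
tp, fp, fn = 8, 2, 8

print(precision(tp, fp))  # → 0.8
print(recall(tp, fn))     # → 0.5
```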

$F_1$ Score

$F_1$ Score is the harmonic mean of precision and the true positive rate (recall), which gives equal weight to both

$$F_1 = 2 \times \frac{Precision \times Recall}{Precision + Recall}$$
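A short sketch of the formula, using hypothetical precision and recall values, shows how the score behaves compared with a plain arithmetic average: it is pulled towards the smaller of the two values.

```python
def f1_score(precision, recall):
    """F1 computed from precision and recall; 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical values.
p, r = 0.8, 0.5

print(round((p + r) / 2, 3))           # → 0.65  (arithmetic mean)
print(round(f1_score(p, r), 3))        # → 0.615 (F1 sits closer to the lower value)
print(round(f1_score(0.99, 0.01), 3))  # → 0.02  (one very poor value dominates)
```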

$F_{\beta}$ Score

$F_{\beta}$ is a generalization of the $F_1$ score that weights recall $\beta$ times as much as precision: $\beta > 1$ favors recall, $\beta < 1$ favors precision, and $\beta = 1$ recovers $F_1$.

$$F_{\beta} = (1 + \beta^2) \cdot \frac{Precision \times Recall}{(\beta^2 \cdot Precision) + Recall}$$
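The effect of $\beta$ is easiest to see numerically. In this sketch the precision and recall values are hypothetical, with precision higher than recall, so a recall-heavy $\beta$ lowers the score and a precision-heavy $\beta$ raises it.

```python
def f_beta(precision, recall, beta):
    """F-beta from precision and recall; beta > 1 weights recall more."""
    b2 = beta ** 2
    denominator = b2 * precision + recall
    if denominator == 0:
        return 0.0
    return (1 + b2) * precision * recall / denominator


# Hypothetical values: precision is better than recall.
p, r = 0.8, 0.5

print(round(f_beta(p, r, 0.5), 3))  # → 0.714 (leans towards precision)
print(round(f_beta(p, r, 1.0), 3))  # → 0.615 (same as F1)
print(round(f_beta(p, r, 2.0), 3))  # → 0.541 (leans towards recall)
```

If a false negative is costlier than a false positive, as in the credit risk case discussed earlier, a $\beta$ above 1 is the natural direction; the exact value remains a business decision.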

True Positive Rate (TPR or Recall or Sensitivity)

To scribe in words, TPR is “If it is TRUE, how often does the model predict TRUE?”

$$\frac{True \ Positives}{True \ Positives + False \ Negatives}$$

False Positive Rate (FPR)

To scribe in words, FPR is “When it is FALSE, how often does the model predict TRUE?”

$$\frac{False \ Positives}{False \ Positives + True \ Negatives}$$
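Both rates can be computed from the same four confusion-matrix counts. The counts below are hypothetical, and the functions return 0.0 for an empty denominator as a convention chosen for this sketch.

```python
def true_positive_rate(tp, fn):
    """Share of actual positives that the model predicted as TRUE."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def false_positive_rate(fp, tn):
    """Share of actual negatives that the model predicted as TRUE."""
    return fp / (fp + tn) if (fp + tn) else 0.0


# Hypothetical counts from 100 samples: 16 positives, 84 negatives.
tp, fn, fp, tn = 8, 8, 2, 82

print(true_positive_rate(tp, fn))             # → 0.5
print(round(false_positive_rate(fp, tn), 3))  # → 0.024
```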

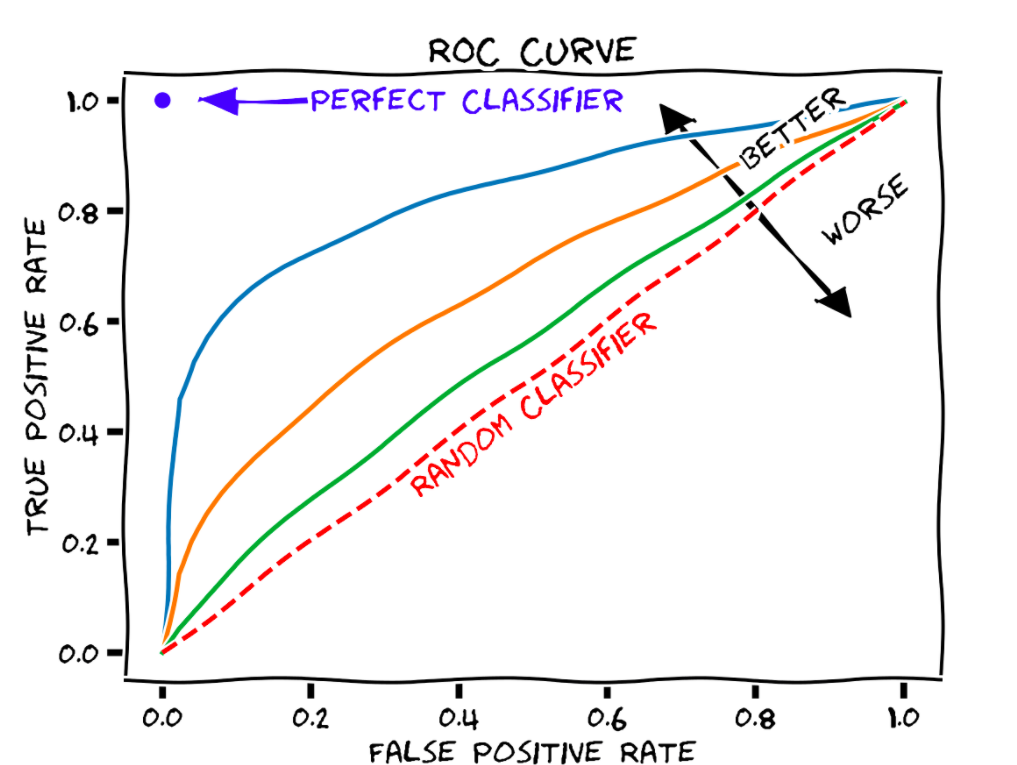

ROC Curve

ROC stands for Receiver Operating Characteristic. ROC curves are used to present the results of binary decision problems.

- ROC curves show how the number of correctly classified positive samples varies with the number of incorrectly classified negative samples, i.e.,

$$False \ Positive \ Rate(FPR) \ vs \ True \ Positive \ Rate(TPR)$$

- ROC curves can present an overly optimistic view of an algorithm’s performance, particularly when the class distribution is heavily skewed

- In ROC space, the goal is to be in the upper left-hand corner
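The points of a ROC curve can be traced by sweeping the decision threshold over the model's scores and recording (FPR, TPR) at each step. The following is a minimal sketch that assumes both classes are present in the data; the labels and scores are hypothetical, and the area under the curve is approximated with the trapezoidal rule.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, starting at (0, 0), for descending thresholds."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for a, s in zip(y_true, scores) if s >= threshold and a == 1)
        fp = sum(1 for a, s in zip(y_true, scores) if s >= threshold and a == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical labels and model scores (higher score = more likely positive).
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

points = roc_points(y_true, scores)
print(points[-1])             # → (1.0, 1.0)
print(round(auc(points), 3))  # → 0.889
```

A curve that hugs the upper left-hand corner yields an area close to 1, while a model no better than chance stays near the diagonal with an area around 0.5.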

Other Metrics

The metrics discussed above are the most common evaluation metrics for classification problems. There is also an extensive list of metrics specific to the problem of interest. To name a few:

- Concordant - Discordant Ratio, Pair Ranking

- Gini Coefficient - Degree of Variation

- Kolmogorov Smirnov Chart - Distance between Distributions

- Gain and Lift Charts - Classification

- Word Error Rate (WER) - Speech

- Perplexity - NLP

- BLEU Score - NLP

- Intersection over Union (IoU) - Computer Vision

- Inception Score - GANs

- Frechet Inception Distance - GANs

- Peak Signal to Noise Ratio (PSNR) - Image Quality & Reconstruction

- Structural Similarity Index(SSIM) - Image Quality and Reconstruction

- Mean Reciprocal Rank(MRR) - Ranking

- Discounted Cumulative Gain(DCG) - Ranking

- Normalized Discounted Cumulative Gain(NDCG) - Ranking
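As one illustration from the list above, here is a minimal sketch of DCG and NDCG for a single ranked result list. It assumes the common linear-gain form, where each graded relevance is divided by $\log_2(rank + 1)$; other formulations exist, for example with an exponential gain. The relevance grades are hypothetical.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain with linear gain and a log2 rank discount."""
    return sum(rel / math.log2(rank + 1)
               for rank, rel in enumerate(relevances, start=1))


def ndcg(relevances):
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal else 0.0


# Hypothetical graded relevance of results, in the order they were ranked.
ranked_relevances = [3, 2, 3, 0, 1, 2]

print(round(dcg(ranked_relevances), 3))   # → 6.861
print(round(ndcg(ranked_relevances), 3))  # → 0.961
```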

Conclusion

Nikhil’s post prompted many people from diverse domains to come together and share their opinions and ideas. For a simple classification problem, the most meaningful and reliable measure could be AUC-ROC. I noticed that almost all research papers in the healthcare domain conclude with one flavor or another of the ROC curve. Beyond that, leaning towards any particular metric is not advised.

This is why no ONE metric makes sense for all use cases. The outcomes and predictions need to be evaluated based on their costs/benefits.

- Phil Fry

Research from academia and research from industry are equally important. Though the context and objectives differ between the two, our end goal is to build superior human-like, human-inspired, reliable, and responsible expert systems for the greater good.

Few Critical Reads

- Damage Caused by Classification Accuracy and Other Discontinuous Improper Accuracy Scoring Rules by Frank Harrell, 2020

- Of quantiles and expectiles: consistent scoring functions, Choquet representations and forecast rankings by Ehm et al, 2016

- Biostatistics for Biomedical Research by Harrell and Slaughter of Vanderbilt Univ, 2021

- Machine Learning Meets Economics by Nicolas Kruchten, 2016
