Istio Service Mesh, Canary Release Routing Strategies for ML Deployments in a Kubernetes Cluster

Posted October 16, 2021 by Gowri Shankar ‐ 13 min read

Change is the only constant in this universe. Our data changes and causes data drift; then our understanding of nature changes and causes concept drift. Yet we believe that building State of the Art (SOA), One of a Kind (OAK), and First of its Time (FOT) in-silico intelligence will bring us to a state of nirvana and place us next to the hearts that are liberated from the cycle of life and death. Constructing a model is just the end of the inception; the real trials and the excruciating pain of managing change await us. Shall we plan well ahead, with a conscious focus on a minimum viable product that promises a quicker time to market through a fail-fast approach? Our ego doesn't allow that, because we no longer consider software development cool; we believe building intelligence alone makes us worth our salt. Today anyone can claim to be a data scientist, for two reasons. Reason 1: until 2020 we wrote SQL queries for a living. Then came 2021: the Covid bug bit and mutated us, and we survived variants and waves that naturally upgraded the SQL developer within into a data scientist (an evolutionary process). Reason 2: with all due respect, one man, Dr. Andrew Ng, through his hard work and perseverance, made us believe we are all data scientists. By the way, they say ignorance is bliss, and we can continue building our SOA, OAK, and FOT models forever at the expense of someone else's cash. BTW, has anyone noticed that Andrew is moving away from model-centric AI to data-centric AI? He is a genius, and he will take us to the place where we truly belong.

In this post, I would like to pitch in a few critical concepts on model serving and deployment for making robust machine learning releases and upgrades. It also carries a practical guide to load balancing and routing schemes for running experiments on a subset of users in the production environment. This is the first post on MLOps, where we study a few tools and technologies for rapid and continuous deployment.

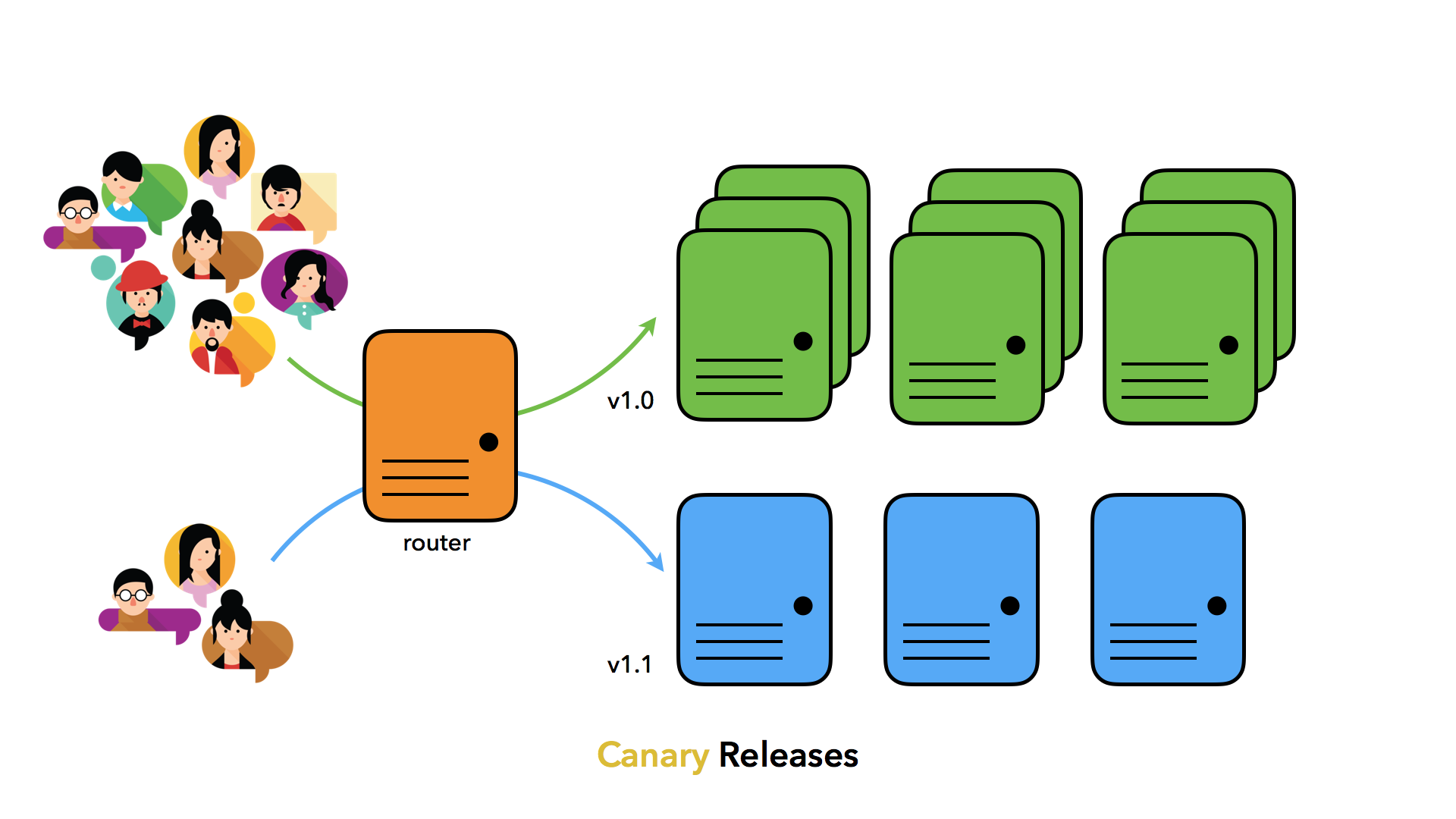

- Image Credit - Using Canary Release Pipelines to Achieve Continuous Testing

This post is inspired by the GCP guide for MLOps that focuses on canary deployment, refer here

Objective

The prime objective of this post is to understand the canary model serving strategy for deploying machine learning models. In that quest, we shall learn the following:

- Prepare a Google Kubernetes Engine(GKE) cluster

- Istio service mesh

- Deploying models using TF Serving

- Configuring Istio Ingress gateway, services, and rules

- Configuring weight and content-based traffic routing strategies

Introduction

Our models are nothing but a manifestation of the data we have provided to make meaning out of a confined universe. This universe changes continually for a simple reason: we cannot account for all the confounders, which leads to drifts from the initial assumptions and presumptions we made. However, the changes can be monitored through the statistical properties of the features considered, the predictions made from those features, and the correlations between them. Model drift refers to the degradation of a model's performance due to changes in the universe; these changes are caused by one or both of the following drifts:

Data Drift:

A change in the distribution of the data is a clear indicator of data drift. For example, a marketing campaign for a particular product that is targeted at teens with an average age of 18 results in a loss of revenue from the adult group. A refocus of the target audience is then suggested.

Concept Drift:

Concept drift is a change in our understanding of the confounders. For example, for an alcoholic beverage company, the potential customer base is aged 18 and above. Suppose, however, that the federal government decides to raise the legal age limit for consuming alcohol to 25. The age group between 18 and 25 then goes completely out of consideration in the recommendation system.
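As a rough illustration of monitoring a change in distribution, the sketch below computes a population stability index (PSI) between a reference sample and a recent sample of ages. PSI is only one common choice and is not something this post's pipeline uses; the helper name, the bin edges, and the age values are all made up for the example.

```python
import math
from collections import Counter


def population_stability_index(reference, current, edges):
    """PSI between two samples over shared bin edges (hypothetical helper)."""

    def bin_fractions(values):
        counts = Counter()
        for value in values:
            # Bin index = number of edges that are less than or equal to the value.
            index = sum(1 for edge in edges if value >= edge)
            counts[index] += 1
        total = len(values)
        # A small floor avoids log(0) for empty bins.
        return [max(counts[i] / total, 1e-6) for i in range(len(edges) + 1)]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Made-up ages: the training data covers all age groups, the serving data only teens
# and young adults.
training_ages = [18, 19, 22, 25, 31, 35, 40, 44, 52, 60]
serving_ages = [17, 18, 18, 19, 19, 20, 21, 22, 23, 24]
edges = [20, 30, 40, 50]

same = population_stability_index(training_ages, training_ages, edges)
shifted = population_stability_index(training_ages, serving_ages, edges)
print(f"PSI against itself: {same:.3f}, PSI against serving data: {shifted:.3f}")
```

A PSI of zero means the two binned distributions are identical; larger values indicate a larger shift, which is the kind of signal that would prompt a closer look at the model.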

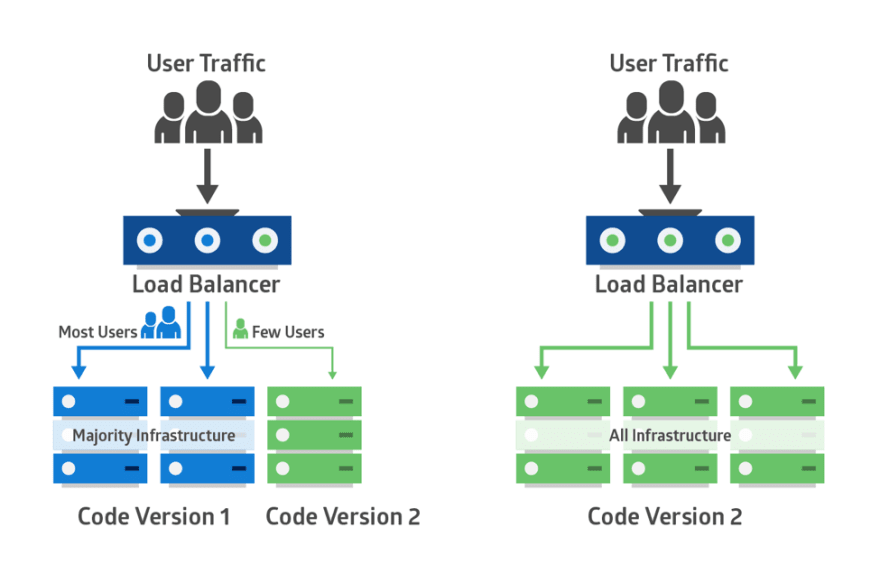

Canary Deployment Strategy

In the olden days, coal miners used a simple tactic to detect toxic gases: they sent canaries into the mines before stepping in themselves. This risk reduction strategy inspires software deployment and upgrade processes, where a subset of users is assigned to the new deployment. That is, whenever there is an upgrade, a portion of the users is allowed to use the new pathways, and the rest of the traffic is sent to the stable deployment. Once the stability of the new release is confirmed, the other users are brought into the new release gradually or in one shot.

GKE Cluster

Google developed Kubernetes and open-sourced it in 2014. GKE is Google Cloud's fully managed Kubernetes environment, which combines the simplicity of PaaS with the flexibility of IaaS. The following video explains GKE in detail.

This guide uses the GKE cluster to deploy our machine learning models.

from IPython.display import YouTubeVideo

YouTubeVideo('Rl5M1CzgEH4', width="100%")

Istio Service Mesh

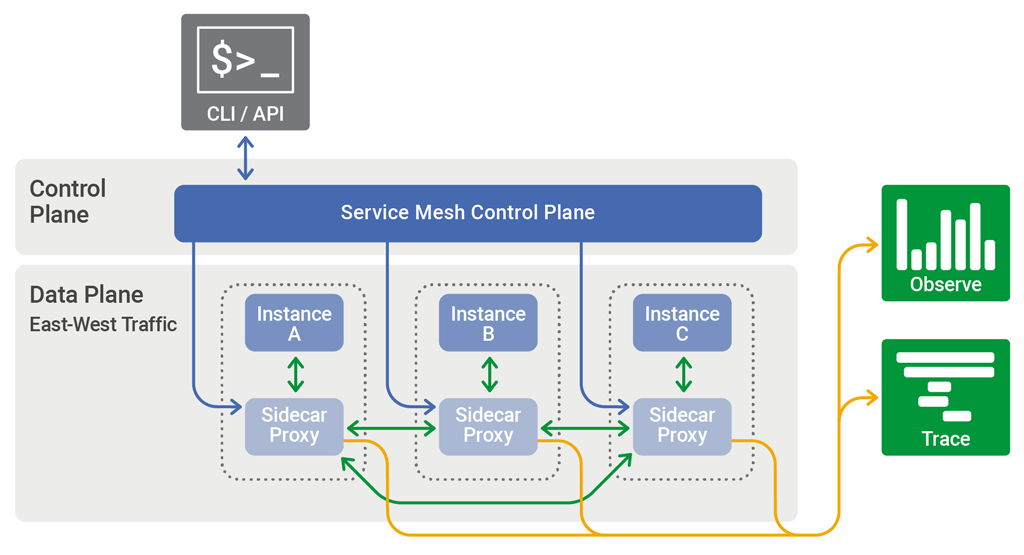

A service mesh is a networking layer that provides transparent, dedicated infrastructure for service-to-service communication between microservices, using proxies.

A service mesh consists of network proxies paired with each service in an application and a set of task management processes. The proxies are called the data plane and the management processes are called the control plane. The data plane intercepts calls between different services and “processes” them; the control plane is the brain of the mesh that coordinates the behavior of proxies and provides APIs for operations and maintenance personnel to manipulate and observe the entire network.

- Wikipedia

This guide uses Istio as the service mesh to expose the deployed models as microservices. Using Istio, we can easily manage the Kubernetes services and expose them to potential consumers.

YouTubeVideo('8oLX5P4ctmY', width="100%")

TF Serving

Model serving is one area where TensorFlow is undoubtedly superior to its competitors (especially PyTorch). TF Serving is TensorFlow's flexible, high-performance serving system for machine learning models, designed with the needs of production environments in mind. We are all familiar with the SavedModel format, which packages a complete TF program, including trained parameters and computation. That is, it does not require the code that was used to build the model.

YouTubeVideo('4mqFDwIdKh0', width="100%")

GKE Cluster, Canary Deployment and Routing Strategies

In this section, we shall make a canary deployment on a GKE cluster with the Istio service-to-service communication layer, step by step. The following are the tasks we will accomplish by the end of this section.

- Activate Cloud Shell

- Create a GKE Cluster

- Install Istio package

- Deploy ML models using TF Serving

- Configure Istio Ingress Gateway

- Configure Istio Virtual Services

- Configure Istio Service Rules

- Configure weight-based routing

- Configure content-based routing

To do this in vivo, you need a Google Cloud account and a project.

All instructions and the configuration files(*.yaml) can be found here

Activate Cloud Shell and Get Sources

GCloud provides Cloud Shell free of cost and it can be activated by clicking the “Activate Cloud Shell” button in the developer console. Cloud shell also provides a free editor, a VS code server instance for code development.

Authorize Cloud Shell

gcloud auth list

Credentialed Accounts

ACTIVE: *

ACCOUNT: *************@gmail.com

To set the active account, run:

$ gcloud config set account `ACCOUNT`

gcloud config set account *****@gmail.com

- Updated property [core/account].

Get Source Files From GCloud Repo.

kpt pkg get https://github.com/GoogleCloudPlatform/mlops-on-gcp/workshops/mlep-qwiklabs/tfserving-canary-gke tfserving-canary

Package "tfserving-canary":

Fetching https://github.com/GoogleCloudPlatform/mlops-on-gcp@master

From https://github.com/GoogleCloudPlatform/mlops-on-gcp

* branch master -> FETCH_HEAD

* [new branch] master -> origin/master

Adding package "workshops/mlep-qwiklabs/tfserving-canary-gke".

Fetched 1 package(s).

cd tfserving-canary

Creating GKE Cluster

Update compute zone, set the project id and cluster name

gcloud config set compute/zone us-central1-f

PROJECT_ID=$(gcloud config get-value project)

CLUSTER_NAME=canary-cluster

Create GKE Cluster with Istio Add On

gcloud services enable container.googleapis.com

gcloud beta container clusters create $CLUSTER_NAME \
  --project=$PROJECT_ID \
  --addons=Istio \
  --istio-config=auth=MTLS_PERMISSIVE \
  --cluster-version=latest \
  --machine-type=n1-standard-4 \
  --num-nodes=2

WARNING: Currently VPC-native is the default mode during cluster creation for versions greater than 1.21.0-gke.1500. To create advanced routes based clusters, please pass the `--no-enable-ip-alias` flag

WARNING: Starting with version 1.18, clusters will have shielded GKE nodes by default.

WARNING: Your Pod address range (`--cluster-ipv4-cidr`) can accommodate at most 1008 node(s).

WARNING: Starting with version 1.19, newly created clusters and node-pools will have COS_CONTAINERD as the default node image when no image type is specified.

Creating cluster canary-cluster in us-central1-f...done.

Created [https://container.googleapis.com/v1beta1/projects/vf-core-1/zones/us-central1-f/clusters/canary-cluster].

To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/us-central1-f/canary-cluster?project=vf-core-1

kubeconfig entry generated for canary-cluster.

NAME: canary-cluster

LOCATION: us-central1-f

MASTER_VERSION: 1.21.4-gke.2300

MASTER_IP: 35.239.142.56

MACHINE_TYPE: n1-standard-4

NODE_VERSION: 1.21.4-gke.2300

NUM_NODES: 2

STATUS: RUNNING

If you bump into any quota issues, check this and this

Verify the Cluster

gcloud container clusters get-credentials $CLUSTER_NAME

Verify the Istio Services

kubectl get service -n istio-system

> NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

> istio-citadel ClusterIP 10.92.11.95 8060/TCP,15014/TCP 3m13s

> istio-galley ClusterIP 10.92.4.110 443/TCP,15014/TCP,9901/TCP 3m13s

> istio-ingressgateway LoadBalancer 10.92.14.82 34.123.5.65 15020:32067/TCP,80:31368/TCP,443:30274/TCP,31400:31329/TCP,15029:31974/TCP,15030:31896/TCP,15031:32040/TCP,15032:31023/TCP,15443:30193/TCP 3m12s

> istio-pilot ClusterIP 10.92.13.129 15010/TCP,15011/TCP,8080/TCP,15014/TCP 3m12s

> istio-policy ClusterIP 10.92.14.95 9091/TCP,15004/TCP,15014/TCP 3m11s

> istio-sidecar-injector ClusterIP 10.92.5.237 443/TCP,15014/TCP 3m11s

> istio-telemetry ClusterIP 10.92.5.152 9091/TCP,15004/TCP,15014/TCP,42422/TCP 3m11s

> istiod-istio-1611 ClusterIP 10.92.12.29 15010/TCP,15012/TCP,443/TCP,15014/TCP,853/TCP 89s

> prometheus ClusterIP 10.92.4.8 9090/TCP 89s

> promsd ClusterIP 10.92.2.59 9090/TCP 3m11s

Verify the Kubernetes Pods and Containers are Deployed and Running

kubectl get pods -n istio-system

> NAME READY STATUS RESTARTS AGE

> istio-citadel-76685f699d-cgrsw 1/1 Running 0 6m43s

> istio-galley-58d48bcb98-4cds6 1/1 Running 0 6m43s

> istio-ingressgateway-5fb67c59c4-vpq5f 1/1 Running 0 6m43s

> istio-pilot-dc6499cf7-t5kxq 2/2 Running 0 6m42s

> istio-policy-676cd7984-v6jfd 2/2 Running 2 6m42s

> istio-security-post-install-1.4.10-gke.17-ngldz 0/1 Completed 0 6m11s

> istio-sidecar-injector-6bcb464d69-255wf 1/1 Running 0 6m42s

> istio-telemetry-75ff96df6f-qswvt 2/2 Running 2 6m42s

> istiod-istio-1611-8859565d6-lswrk 1/1 Running 0 5m2s

> prometheus-7bd69d7dd-vxdxw 2/2 Running 0 5m2s

> promsd-6d88cd87-9pjpr 2/2 Running 1 6m41s

Configuring Automatic Sidecar Injection

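Each pod in the Istio mesh must run an Istio sidecar proxy to take full advantage of the mesh's capabilities. Labeling the namespace as below enables automatic sidecar injection for the pods deployed into it. More info here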

kubectl label namespace default istio-injection=enabled

Model Deployment

Acquire the SavedModel Files

export MODEL_BUCKET=${PROJECT_ID}-bucket

gsutil mb gs://${MODEL_BUCKET}

gsutil cp -r gs://workshop-datasets/models/resnet_101 gs://${MODEL_BUCKET}

Copying gs://workshop-datasets/models/resnet_101/1/saved_model.pb [Content-Type=application/octet-stream]...

Copying gs://workshop-datasets/models/resnet_101/1/variables/variables.data-00000-of-00001 [Content-Type=application/octet-stream]...

Copying gs://workshop-datasets/models/resnet_101/1/variables/variables.index [Content-Type=application/octet-stream]...

- [3 files][173.7 MiB/173.7 MiB]

Operation completed over 3 objects/173.7 MiB.

gsutil cp -r gs://workshop-datasets/models/resnet_50 gs://${MODEL_BUCKET}

Copying gs://workshop-datasets/models/resnet_50/1/saved_model.pb [Content-Type=application/octet-stream]...

Copying gs://workshop-datasets/models/resnet_50/1/variables/variables.data-00000-of-00001 [Content-Type=application/octet-stream]...

Copying gs://workshop-datasets/models/resnet_50/1/variables/variables.index [Content-Type=application/octet-stream]...

\ [3 files][ 99.4 MiB/ 99.4 MiB]

Operation completed over 3 objects/99.4 MiB.

Config Map

Update the config map files with your bucket name, using the Cloud Shell editor.

- File 1: tfserving-canary/tf-serving/configmap-resnet101.yaml

- File 2: tfserving-canary/tf-serving/configmap-resnet50.yaml

# Copyright 2020 Google Inc. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1
kind: ConfigMap
metadata: # kpt-merge: /resnet50-configs
  name: resnet50-configs
data:
  MODEL_NAME: image_classifier
  MODEL_PATH: gs://vf-core-1-bucket/resnet_50 # HERE HERE

Model deployment has 3 steps:

- Configure the deployment via the configmap-*.yaml file. This file has the SavedModel location and name.

- Deploy the model using the deployment-*.yaml file. This file has the specs for containers, ports, replica details, etc.

- Expose the deployed model as a service using service.yaml. This step exposes a stable IP address and DNS name.

kubectl apply -f tf-serving/configmap-resnet50.yaml

- configmap/resnet50-configs created

kubectl apply -f tf-serving/deployment-resnet50.yaml

- deployment.apps/image-classifier-resnet50 created

kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

image-classifier-resnet50 1/1 1 1 27s

# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: image-classifier
  namespace: default
  labels:
    app: image-classifier
    service: image-classifier
spec:
  type: ClusterIP
  ports:
  - port: 8500
    protocol: TCP
    name: tf-serving-grpc
  - port: 8501
    protocol: TCP
    name: tf-serving-http
  selector:
    app: image-classifier

The selector field refers to the app: image-classifier label. What it means is that the service will load balance across all pods annotated with this label. At this point these are the pods comprising the ResNet50 deployment. The service type is ClusterIP. The IP address exposed by the service is only visible within the cluster.

- GCloud QWik Labs
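The selector behaviour described in the quote can be sketched in a few lines of Python. This is only a toy model of label matching, not Kubernetes code; the pod names and the `version` label values are assumptions made for illustration and do not come from the deployment files.

```python
def selects(selector, pod_labels):
    """Return True when every selector key/value pair is present in the pod's labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Selector taken from service.yaml.
service_selector = {"app": "image-classifier"}

# Hypothetical pods; the names and the 'version' labels are assumed for this sketch.
pods = {
    "image-classifier-resnet50-abc": {"app": "image-classifier", "version": "resnet50"},
    "image-classifier-resnet101-xyz": {"app": "image-classifier", "version": "resnet101"},
    "some-other-pod": {"app": "batch-job"},
}

backends = sorted(name for name, labels in pods.items() if selects(service_selector, labels))
print(backends)
```

Because the selector only names the `app` label, any pod carrying that label becomes a backend of the service, whichever model it serves. That is why finer-grained routing rules are needed once a second model is deployed behind the same service.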

kubectl apply -f tf-serving/service.yaml

- service/image-classifier created

Configuring Istio Ingress Gateway

An Istio gateway manages inbound and outbound traffic for the service mesh. Here the ingress gateway is configured to accept traffic for the image classifier.

kubectl apply -f tf-serving/gateway.yaml

- gateway.networking.istio.io/image-classifier-gateway created

Virtual services, along with destination rules are the key building blocks of Istio’s traffic routing functionality. A virtual service lets you configure how requests are routed to a service within an Istio service mesh. Each virtual service consists of a set of routing rules that are evaluated in order, letting Istio match each given request to the virtual service to a specific real destination within the mesh.

- GCloud, QWikLabs

kubectl apply -f tf-serving/virtualservice.yaml

- virtualservice.networking.istio.io/image-classifier created

Access ResNet50 Model

export INGRESS_HOST=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].port}')

export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT

echo $GATEWAY_URL

- 34.123.5.65:80

- Image Credit: What Is a Service Mesh?

curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

#CURL Output

{

"predictions": [

{

"labels": ["military uniform", "pickelhaube", "suit", "Windsor tie", "bearskin"],

"probabilities": [0.453408211, 0.209194973, 0.193582058, 0.0409308933, 0.0137334978]

}

]

}
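For readers who prefer calling the endpoint from Python, here is a minimal sketch using only the standard library. It builds the same POST request as the curl command without sending it, and picks the most probable label from the response shown above. The function names are hypothetical, and the empty JSON body is a placeholder, since the contents of payloads/request-body.json are not reproduced in this post.

```python
import json
import urllib.request


def build_predict_request(gateway_url, body):
    """Build (but do not send) a POST request to the TF Serving REST predict endpoint."""
    url = f"http://{gateway_url}/v1/models/image_classifier:predict"
    headers = {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def top_label(response_text):
    """Return the (label, probability) pair with the highest probability."""
    prediction = json.loads(response_text)["predictions"][0]
    pairs = zip(prediction["labels"], prediction["probabilities"])
    return max(pairs, key=lambda pair: pair[1])


# Response copied from the curl output above.
sample_response = """
{"predictions": [{
  "labels": ["military uniform", "pickelhaube", "suit", "Windsor tie", "bearskin"],
  "probabilities": [0.453408211, 0.209194973, 0.193582058, 0.0409308933, 0.0137334978]
}]}
"""

# b"{}" is a placeholder body; a real call would read payloads/request-body.json instead.
request = build_predict_request("34.123.5.65:80", b"{}")
best = top_label(sample_response)
print(request.get_method(), request.full_url)
print(best)
```

Passing the request object to `urllib.request.urlopen` would actually send it; that step is left out here because it needs a live gateway.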

Deploying ResNet101 as a Canary Release

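The weight field of each route provides the traffic-splitting information. The resnet50 and resnet101 subsets referenced by the routes have to be defined through a destination rule (see tf-serving/destinationrule.yaml in the History section).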

# virtualservice-weight-100.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: image-classifier
spec:
  hosts:
  - "*"
  gateways:
  - image-classifier-gateway
  http:
  - route:
    - destination:
        host: image-classifier
        subset: resnet50
        port:
          number: 8501
      weight: 100
    - destination:
        host: image-classifier
        subset: resnet101
        port:
          number: 8501
      weight: 0

kubectl apply -f tf-serving/virtualservice-weight-100.yaml

- virtualservice.networking.istio.io/image-classifier configured

kubectl apply -f tf-serving/configmap-resnet101.yaml

- configmap/resnet101-configs created

kubectl apply -f tf-serving/deployment-resnet101.yaml

- deployment.apps/image-classifier-resnet101 created

kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

image-classifier-resnet101 0/1 1 0 8m35s

image-classifier-resnet50 1/1 1 1 21m

Routing Split 70/30

# virtualservice-weight-70.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: image-classifier
spec:
  hosts:
  - "*"
  gateways:
  - image-classifier-gateway
  http:
  - route:
    - destination:
        host: image-classifier
        subset: resnet50
        port:
          number: 8501
      weight: 70
    - destination:
        host: image-classifier
        subset: resnet101
        port:
          number: 8501
      weight: 30
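To get a feel for what a 70/30 split means across many requests, the following sketch simulates weighted routing with Python's random module. It only mimics the proportions and says nothing about how the Istio proxies implement weighted routing internally; the function name, the seed, and the request count are arbitrary choices for the example.

```python
import random
from collections import Counter

# Weights mirror virtualservice-weight-70.yaml.
ROUTES = {"resnet50": 70, "resnet101": 30}


def pick_subset(rng, routes=ROUTES):
    """Pick a destination subset at random, in proportion to the route weights."""
    subsets = list(routes)
    weights = [routes[name] for name in subsets]
    return rng.choices(subsets, weights=weights, k=1)[0]


rng = random.Random(42)  # fixed seed so the simulation is repeatable
total_requests = 10_000
hits = Counter(pick_subset(rng) for _ in range(total_requests))
share_resnet101 = hits["resnet101"] / total_requests
print(hits, f"canary share: {share_resnet101:.1%}")
```

Each individual request can land on either model, so a single curl call tells you little; the split only shows up over a large number of requests.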

Routing Split by User Group

The user-group header match specifies which user group is served by which model. Here, requests carrying the header user-group: canary are routed to ResNet101, and all other requests go to ResNet50.

# virtualservice-focused-routing.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: image-classifier
spec:
  hosts:
  - "*"
  gateways:
  - image-classifier-gateway
  http:
  - match:
    - headers:
        user-group:
          exact: canary
    route:
    - destination:
        host: image-classifier
        subset: resnet101
        port:
          number: 8501
  - route:
    - destination:
        host: image-classifier
        subset: resnet50
        port:
          number: 8501

curl -d @payloads/request-body.json -H "user-group: canary" -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict
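The content-based rules above are evaluated in order, with the first match winning. A small Python sketch of that logic follows; it is a simplified model written for this post, not Istio's implementation, and the rule structure and function name are made up.

```python
# Ordered rules mirroring virtualservice-focused-routing.yaml.
# An empty match acts as the catch-all route.
RULES = [
    {"match": {"user-group": "canary"}, "subset": "resnet101"},
    {"match": {}, "subset": "resnet50"},
]


def route(headers, rules=RULES):
    """Return the subset of the first rule whose header conditions all match exactly."""
    # HTTP header names are case-insensitive, so compare them in lowercase.
    normalized = {name.lower(): value for name, value in headers.items()}
    for rule in rules:
        if all(normalized.get(name) == value for name, value in rule["match"].items()):
            return rule["subset"]
    raise LookupError("no routing rule matched the request")


print(route({"User-Group": "canary"}))  # canary users
print(route({}))                        # everyone else
```

Rule order matters: if the catch-all rule were listed first, it would match every request and the canary rule would never be reached.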

Cleanup

gcloud container clusters delete canary-cluster

gsutil rm -r gs://vf-core-1-bucket

> Removing gs://vf-core-1-bucket/resnet_101/1/saved_model.pb#1634378699740657...

> Removing gs://vf-core-1-bucket/resnet_101/1/variables/variables.data-00000-of-00001#1634378700339813...

> Removing gs://vf-core-1-bucket/resnet_101/1/variables/variables.index#1634378700932108...

> Removing gs://vf-core-1-bucket/resnet_50/1/saved_model.pb#1634378703445890...

> / [4 objects]

> ==> NOTE: You are performing a sequence of gsutil operations that may

> run significantly faster if you instead use gsutil -m rm ... Please

> see the -m section under "gsutil help options" for further information

> about when gsutil -m can be advantageous.

Removing gs://vf-core-1-bucket/resnet_50/1/variables/variables.data-00000-of-00001#1634378704035172...

Removing gs://vf-core-1-bucket/resnet_50/1/variables/variables.index#1634378704635678...

/ [6 objects]

Operation completed over 6 objects.

Removing gs://vf-core-1-bucket/...

Conclusion

MLOps is a critical area in machine learning and AI development. The right tools for the right job at the right time will save us significant energy, reduce anxiety, and eventually lead to sound sleep at night. For example, a Kubernetes cluster and TF Serving are essentials, not luxuries, when we think about drifts and continuous integration. The Istio service mesh makes our job easier by sparing us one more codebase for an application layer for serving (Flask and FastAPI are the popular candidates). Further, the canary deployment strategy enables quick upgrades and easy rollbacks when things go wrong.

This post was long pending. MLOps is a huge topic, and I guess I will be writing more on it, mainly focusing on automation strategies. I hope you all benefit from this post.

References

- Implementing Canary releases of TensorFlow model deployments with Kubernetes and Istio from GCP

- What is Istio? from Istio

- Using the SavedModel format from Tensorflow Documentation

- Canary Deployment from Split.io

- What is a Canary Release? by Launch Darkly, 2021

- Service Mesh from Wikipedia

History

Dump of most of the commands I executed in the cloud shell today.

407 gcloud auth list

408 gcloud config set account `ACCOUNT`

409 gcloud config set account *************@gmail.com

410 gcloud config list project

411 pwd

412 cd

413 pwd

414 kpt pkg get https://github.com/GoogleCloudPlatform/mlops-on-gcp/workshops/mlep-qwiklabs/tfserving-canary-gke tfserving-canary

415 ls

416 cd tfserving-canary/

417 gcloud config set compute/zone us-central1-f

418 PROJECT_ID=$(gcloud config get-value project)

419 CLUSTER_NAME=canary-cluster

420 gcloud beta container clusters create $CLUSTER_NAME --project=$PROJECT_ID --addons=Istio --istio-config=auth=MTLS_PERMISSIVE --cluster-version=latest --machine-type=n1-standard-4 --num-nodes=3

421 gcloud enable container.googleapis.com

422 gcloud services enable container.googleapis.com

423 gcloud beta container clusters create $CLUSTER_NAME --project=$PROJECT_ID --addons=Istio --istio-config=auth=MTLS_PERMISSIVE --cluster-version=latest --machine-type=n1-standard-4 --num-nodes=3

424 gcloud compute project-info describe --project $PROJECT_ID

425 gcloud compute regions describe region-name

426 gcloud compute regions describe us-central1-f

427 gcloud compute regions describe us-central1

428 gcloud config set compute/zone us-central1

429 gcloud config set compute/zone us-central1-f

430 gcloud beta container clusters create $CLUSTER_NAME --project=$PROJECT_ID --addons=Istio --istio-config=auth=MTLS_PERMISSIVE --cluster-version=latest --machine-type=n1-standard-4 --num-nodes=3

431 gcloud beta container clusters create $CLUSTER_NAME --project=$PROJECT_ID --addons=Istio --istio-config=auth=MTLS_PERMISSIVE --cluster-version=latest --machine-type=n1-standard-4 --num-nodes=2

432 gcloud container clusters get-credentials $CLUSTER_NAME

433 kubectl get service -n istio-system

434 kubectl get pods -n istio-system

435 kubectl label namespace default istio-injection=enabled

436 export MODEL_BUCKET=${PROJECT_ID}-bucket

437 gsutil mb gs://${MODEL_BUCKET}

438 gsutil cp -r gs://workshop-datasets/models/resnet_101 gs://${MODEL_BUCKET}

439 gsutil cp -r gs://workshop-datasets/models/resnet_50 gs://${MODEL_BUCKET}

440 echo $MODEL_BUCKET

441 kubectl apply -f tf-serving/configmap-resnet50.yaml

442 kubectl apply -f tf-serving/deployment-resnet50.yaml

443 kubectl get deployments

444 kubectl apply -f tf-serving/service.yaml

445 kubectl apply -f tf-serving/gateway.yaml

446 kubectl apply -f tf-serving/virtualservice.yaml

447 export INGRESS_HOST=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

448 export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].port}')

449 export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT

450 echo $GATEWAY_URL

451 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

452 ls

453 echo $GATEWAY_URL

454 kubectl apply -f tf-serving/virtualservice-weight-100.yaml

455 kubectl apply -f tf-serving/configmap-resnet101.yaml

456 kubectl apply -f tf-serving/deployment-resnet101.yaml

457 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

458 kubectl apply -f tf-serving/virtualservice-weight-70.yaml

459 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

460 kubectl apply -f tf-serving/destinationrule.yaml

461 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

462 kubectl apply -f tf-serving/virtualservice-weight-100.yaml

463 kubectl apply -f tf-serving/configmap-resnet101.yaml

464 kubectl apply -f tf-serving/deployment-resnet101.yaml

465 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

466 kubectl apply -f tf-serving/virtualservice-weight-70.yaml

467 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

468 kubectl get deployments

469 kubectl apply -f tf-serving/virtualservice-focused-routing.yaml

470 curl -d @payloads/request-body.json -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

471 curl -d @payloads/request-body.json -H "user-group: canary" -X POST http://$GATEWAY_URL/v1/models/image_classifier:predict

472 kubectl get deployments

473 kubectl apply -f tf-serving/deployment-resnet101.yaml

474 history